What can medicine learn from the oil and gas industry? asks Tane Eunson.

Unfortunately for patients and healthcare workers alike, medical errors happen. No matter how well-trained and experienced the practitioner, underneath the scrubs there still resides a human, and errors will follow. However, systems can be put in place to minimise them, and medicine would do well to learn lessons from other industries.

In 2012, there were 107 serious medical errors in Australian hospitals. These ranged from surgery performed on the wrong patient or body part, to surgery where instruments were left inside the patient, to medication errors and in-hospital suicides.

When considered in the context of the 53 million patient interactions that occurred that year [1], the serious medical error rate is a staggeringly low 0.0002%. However, when the types of error are considered, like operating on the wrong patient, it’s apparent there is still room for improvement. Surgeons are generally intelligent, highly trained and well-meaning people who don’t intentionally operate on the wrong patient, so what is going wrong to allow such seemingly basic errors to happen?

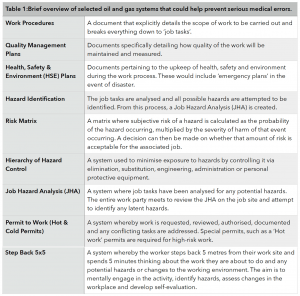

Basic errors like these remind me of my early twenties, when I worked as a glorified labourer on offshore oil platforms in the booming Western Australian ‘Oil and Gas’ industry. The oil and gas (O&G) industry taught me many things (like the fact that becoming a doctor was a really attractive option), but it also taught me a lot about quality and safety. O&G has a huge array of structures and systems in place to improve quality and safety by minimising human error. There are ‘permit to work’ systems, ‘risk matrices’, ‘job hazard analyses’, ‘hierarchy of controls’ and ‘step back 5x5s’ (NB: brief explanations of these systems are outlined in the table at the end). Thankfully these systems are in place, because when you’re living on an exceptionally large BBQ gas bottle and your closest neighbours are sharks, things that go wrong go very, very wrong.

These structures, systems and permits are all well and good for tradesmen and engineers, but what do they mean for doctors and patients? Can lessons learned in one industry be transferred to another that is so vastly different?

Best-selling author and professor of surgery at Harvard Medical School Atul Gawande addressed this topic in his 2009 book “The Checklist Manifesto”. Gawande cites real-world examples, beginning with US military aviation and how pilots use checklists to find simplicity in operating highly complex machines. He gives an account of the 2009 US Airways emergency landing on the Hudson River and how its pilots used algorithmic checklists to bring the plane to safety. He also cites other industries that have made gains with checklists, from skyscraper construction to investment banking. He posits that a checklist needs to be of optimal length: long enough to capture the crucial components, but not so exhaustive that the user disengages. Gawande also notes that in modern medicine our great challenge is not ignorance but ineptitude, meaning we often have the knowledge required but don’t always apply it properly. Seeking to minimise human error and find simplicity in complexity while properly applying accepted knowledge, his research team integrated these ideas into their own checklists for surgery. These checklists nearly halved the number of surgical deaths in the hospitals where they were trialled. Halved. From a simple checklist of the kind that pilots, builders and bankers use.

So what exactly did the oil and gas industry do? How did it get there, and what can medicine learn?

O&G has learned some harsh lessons from disasters in its history. One of the most tragic was the 1988 fire on the Piper Alpha platform in the North Sea, which claimed the lives of 167 men [3]. At the time it was the worst offshore oil disaster in history. A public inquiry was established to determine the cause of the tragedy, and it found that an oversight involving conflicting work was the main cause of the initial explosion that led to the platform’s destruction. The inquiry also made 106 recommendations for overhauling safety procedures, and the winds of change began to blow through the oil and gas industry.

Almost 30 years later in Western Australia, quality and safety are priority performance indicators for O&G companies. As a contractor I worked for many different companies on many different sites, which gave me a unique perspective, and I know the systems are largely the same across companies. As in “The Checklist Manifesto”, this illustrates that successful systems can be transferred between organisations. Of particular interest are the step back 5x5s and the permit to work system, which could reduce error rates in many medical situations, such as transfer of care, patients with multiple co-morbidities, and emergency retrieval.

Step back 5x5s: This is where the worker steps back 5 metres from their work site and spends 5 minutes thinking about the work they are about to do. The aim is to engage mentally with the task, identify hazards, assess changes in the workplace and encourage self-evaluation. Frankly, this is a prudent habit for all aspects of life. It is simple, cost-effective and has little downside. It helps get the simple stuff right, like making sure you are operating on the correct patient. By applying this behaviour in times of complexity, we may help avoid all sorts of medical errors.

Gaining competence in the permit to work (PTW) system involves a multi-day course, so the following is the briefest of summaries. Because PTW is designed to deal with complexity, it could transfer readily to the medical world, where it could be applied to the management of a complex patient. In O&G, the system functions as a number of permits associated with a particular job. A permit is required for each unique job task, with some ‘high-risk’ tasks requiring more stringent permits. For example, if the ‘job’ is ‘repair pump’, a permit would be required for ‘changing fluids and parts’, and a separate ‘high-risk’ permit if any ‘grinding, drilling or welding’ were needed for the repair. The ‘permit controller’ then oversees the permits within each job to ensure there are no conflicts or potential for harm. For example, if grinding is required, checks are in place so that no conflicting work, such as replacing combustible fluids, occurs during grinding, because of the risk of explosion. There are a series of other cross-checks by ‘area controllers’, and the entire work party must ‘sign on’ to confirm they understand the permits before work can begin.

Translated into a medical setting, a ‘job’ could be a patient and each permit could be associated with a team’s management, e.g. cardiology, psychiatry, social work etc. The ‘permit controller’ would be the consultant, the ‘area controller’ the senior registrar of the attending team, and the ‘work party’ the junior doctors and allied health staff. Having each team’s management, and each of the patient’s problems, represented clearly and distinctly, and checked and cross-checked in a formal manner, could prevent a great many medical errors.

However, change meets inertia, and I can hear the naysayers groaning “not more paperwork!” In fact, Gawande surveyed colleagues who had used the checklists (the same checklists that halved surgical deaths), and 20% of them said much the same thing: the checklists weren’t easy to use and didn’t improve safety. Yet when asked whether they would want the checklist used if they were having an operation, 93% of them said they would. People don’t like change, even when it works.

Resistance to change can also stem from the culture of a workplace. O&G knows this and works hard to promote a safety-conscious culture. From your first interaction with an O&G company you will be bombarded with safety slogans like “safety starts in the home” and “everyone home safe everyday”. Without this near-indoctrination in safety principles, I doubt the industry would have seen the improvements it has.

Therefore, the culture of our hospitals, and of the professions within them, would need to be honestly evaluated if there is to be a meaningful shift in behaviour that further reduces errors. Whether the medical industry is ready for that shift is another debate entirely. However, it is heartening to know that there are great examples of success in other industries and that, by learning from them, we could prevent more medical errors.

References

- Australian Institute of Health and Welfare (2013). Australian Hospital Statistics.

- Atul Gawande (2009). The Checklist Manifesto.

- Terry Macalister (2013). “Piper Alpha disaster: how 167 oil rig workers died.” The Guardian.

- Dr Nikki Stamp (2016). “How Mistakes In Hospitals Happen.” Huffington Post.

Tane Eunson is a post-graduate student doctor from Perth. This blog was originally published on Life in the FastLane.